Leveraging Data Platforms for Better ESG Reporting and Sustainability

Environmental, social, and governance (ESG) factors have become crucial considerations for businesses worldwide. ESG reporting enable companies to measure and communicate their impact on the environment and society, while ensuring good governance practices. To improve their ESG performance, more and more companies are turning to data platforms which allow them to collect, analyse, and report […]

Unlock the Power of Data with TechConnect and GDPR Compliance

What Is GDPR? The General Data Protection Regulation (GDPR) is an EU legislation enacted in May 2018 that establishes data protection as a fundamental right to UK and EU based users. It includes numerous provisions covering the use, storage, confidentiality, and transfer of personal data. This law ensures that privacy remains a priority, no matter […]

Why should you migrate to cloud?

Migrating to the cloud can be a daunting task for any organisation. There are a variety of factors to consider, including which applications to move, how to move them, and what type of cloud platform to use. Cloud migrations are becoming increasingly important as more businesses move to the cloud. There are many reasons for […]

The Importance of Data in the Business World

In today’s business world, data is more important than ever before. With the advent of big data and data analytics, businesses have been able to gain insights into their operations that were previously unavailable. By understanding their data, businesses can make better decisions, improve their products and services, and find new opportunities for growth. Here’s […]

7 Steps to becoming a more Data-Driven company

Getting the best use from your data

Migrating Relational Data into an Amazon S3 Data Lake

The concept of a data lake is not new, but with the proliferation and adoption of cloud providers the capacity for many companies to adopt the model has exploded. A data lake is a centralised store for all kinds of business data: unstructured – images, videos, PDFs, Word documents semi-structured – JSON, XML, spreadsheets structured – CSVs, RDBMS […]

Digital Transformation with Data

Harnessing Data to drive effective digital transformation The COVID-19 pandemic has made clear that businesses need to be prepared for flexible, remote working practices. As lockdowns forced offices to close and people headed home to limit the potential spread of the virus, many organisations found they weren’t prepared to provision the necessary work from home […]

What’s the difference between Artificial Intelligence (AI) & Machine Learning (ML)?

What’s the difference between Artificial Intelligence (AI) & Machine Learning (ML)? The field of Artificial Intelligence encompasses all efforts at imbuing computational devices with capabilities that have traditionally been viewed as requiring human-level intelligence. This includes: Chess, go and generalised game playing Planning and goal-directed behaviour in dynamic and complex environments Theorem proving, proof assistants and […]

Machine Learning with Amazon SageMaker

Computers are generally programmed to do what the developer dictates and will only behave predictably under the specified scenarios. In recent years, people are increasingly turning to computers to perform tasks that can’t be achieved with traditional programming, which previously had to be done by humans performing manual tasks. Machine Learning gives computers the ability […]

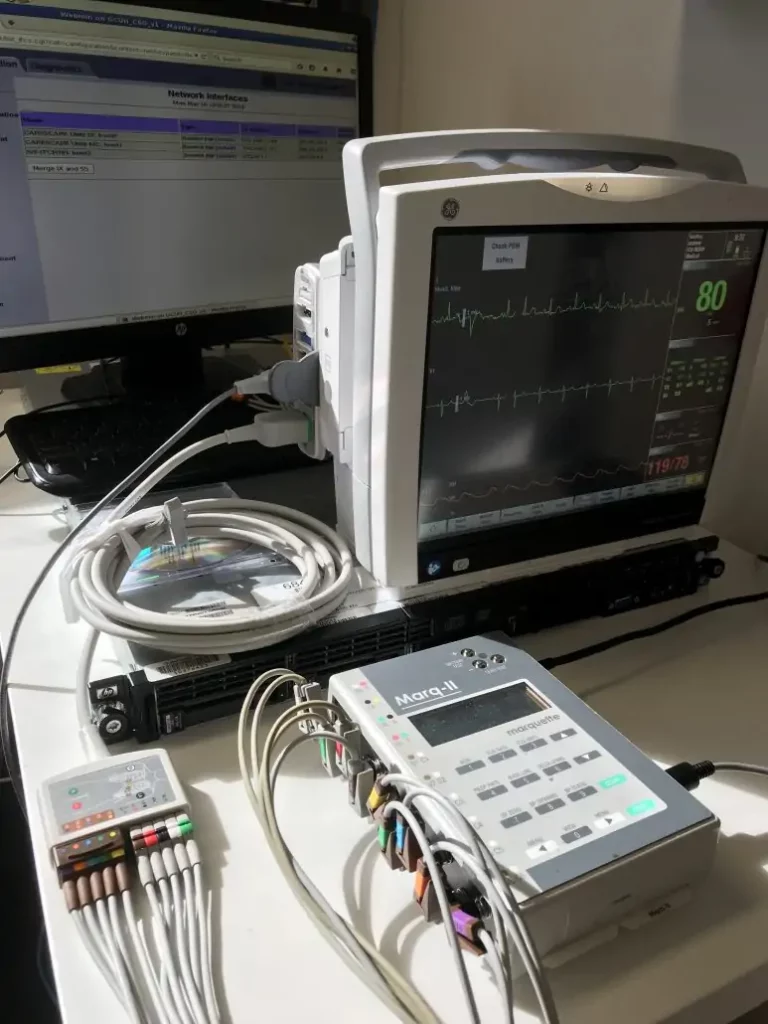

Precision Medicine Data Platform

Recently TechConnect and IntelliHQ attended the eHealth Expo 2018. IntelliHQ are specialists in Machine Learning in the health space, and are the innovators behind the development of a cloud-based precision medicine data platform. TechConnect are IntelliHQ’s cloud technology partners, and our strong relationship with Amazon Web Services and the AWS life sciences team has enabled […]