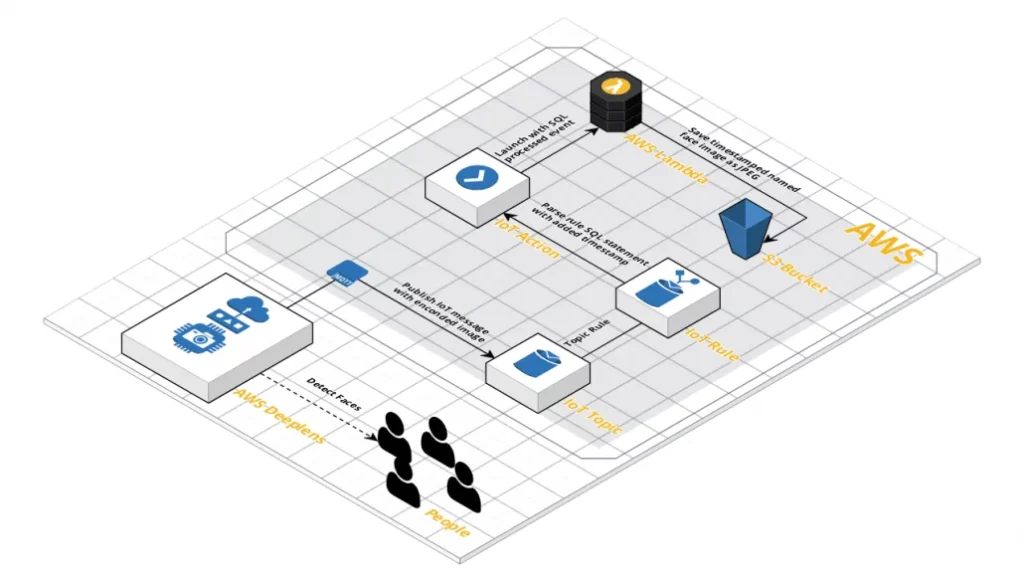

AWS DeepLens: Creating an IoT Rule (Part 2 of 2)

This post is the second in a series on getting started with the AWS DeepLens. In Part 1, we introduced a program that could detect faces and crop them by extending the boilerplate Greengrass Lambda and pre-built model provided by AWS. This focussed on the local capabilities of the device, but the DeepLens device is […]

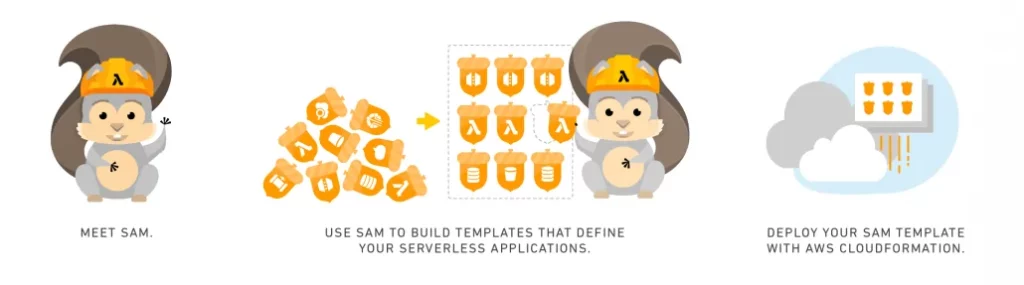

Using AWS SAM for a CORS Enabled Serverless API

Over the past two years, TechConnect has seen increasing demand for “Serverless” API backends, either built from scratch or converted from existing services running on expensive virtual machines in AWS. This has been an iterative learning process for us and, I feel, for many others in the industry. However, it feels like each month pioneers in the […]